The Rise of GPT-4

The March 2023 release of GPT-4 marks a significant advance in deep learning. OpenAI describes it as a major turning point in the development of language models. Compared to GPT-3 and GPT-3.5, this new version offers a number of notable improvements. Let’s examine the main differences between these models and see what sets GPT-4 apart.

GPT-4 is the latest milestone in OpenAI’s efforts to scale up deep learning. GPT stands for generative pre-trained transformer. It is a language model that uses deep learning to produce conversational, human-like text. Unlike earlier versions, it accepts both text and image inputs and produces text outputs. For example, if you upload a picture of a worksheet or a graph, GPT-4 can read it and answer the questions it contains. GPT-4 was released on March 14, 2023, nearly four months after the company launched ChatGPT to the public at the end of November 2022.

Differences between GPT-4 and GPT-3.5

- GPT-4 can use image inputs in addition to text, whereas GPT-3.5 can only process text inputs.

- GPT-4 is more capable in terms of reliability, creativity, and even intelligence, as seen in its higher performance on benchmark exams.

- GPT-4 can handle longer prompts than GPT-3.

- GPT-4 is significantly better than GPT-3 at processing programming instructions.

- Users can give GPT-4 explicit instructions about how it should respond, whereas GPT-3 responds in a uniform tone and style.

- GPT-4 is trained to limit the possibility of harmful responses and to refuse requests for disallowed content.

Only ChatGPT Plus subscribers, who pay $20 per month for access to the language model, have access to GPT-4’s text-input functionality. Even with this subscription there is a usage cap, so you might not always be able to access it when you want to. There is also a free way to access GPT-4’s text capability: Bing Chat, Microsoft’s chatbot, which runs on GPT-4, OpenAI’s most advanced LLM. However, features like visual input are not available in Bing Chat, so it’s not yet clear which features are integrated and which aren’t.

Visual Inputs

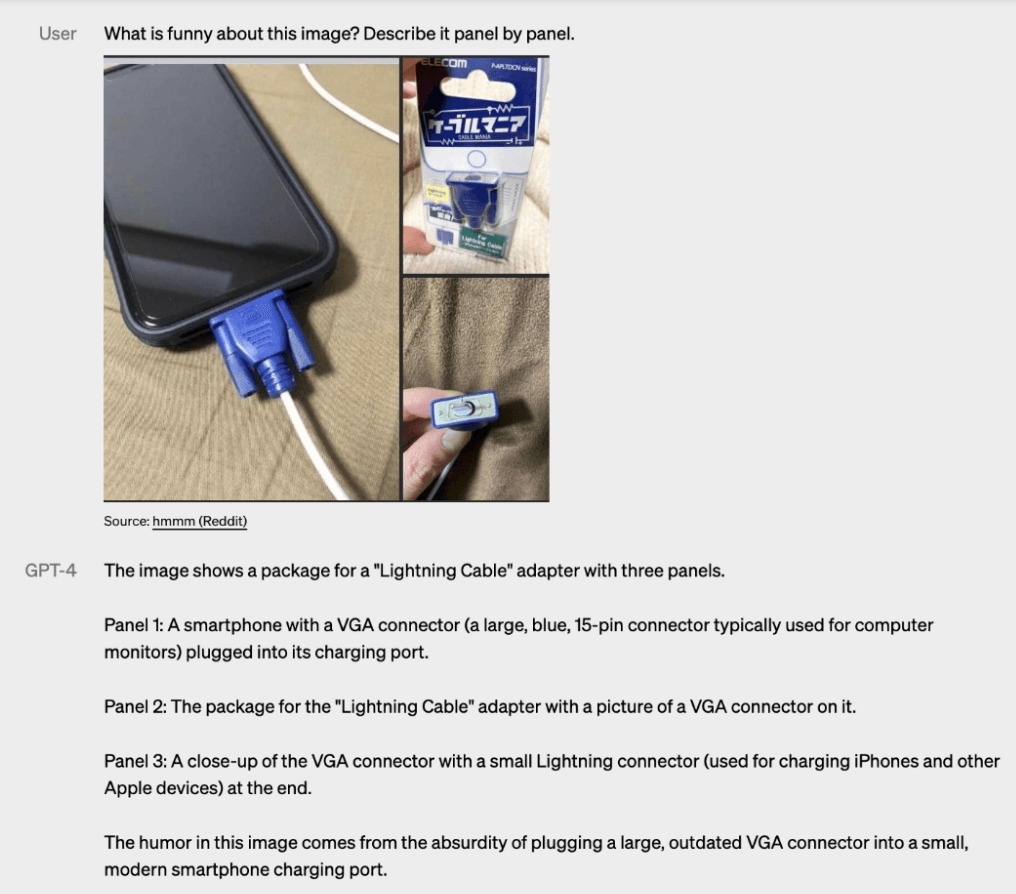

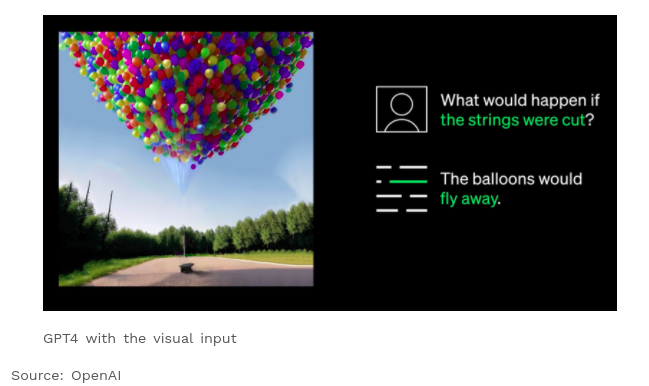

GPT-4 accepts prompts made up of text and images and generates text outputs (natural language, code, etc.). The inputs can intersperse text and images across a range of domains, including documents with text and photographs, diagrams, and screenshots.

For example:

- GPT-4 understands a visual input and produces text output.

- GPT-4 analyzes the content at a given link and gives a precise answer to a related question about it.
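One way to picture a prompt that intersperses text and images is as an ordered list of typed parts. The sketch below is only an illustration: the field names are modeled on the content-part layout used by OpenAI's Chat Completions API, and the helper `build_multimodal_message` and the image URL are hypothetical. It only builds the data structure and does not call any service.

```python
import json


def build_multimodal_message(parts):
    """Turn ("text", str) / ("image", url) pairs into one user message.

    The dictionary layout is an assumption modeled on OpenAI's
    Chat Completions content parts; it is not taken from the article.
    """
    content = []
    for kind, value in parts:
        if kind == "text":
            content.append({"type": "text", "text": value})
        elif kind == "image":
            content.append({"type": "image_url", "image_url": {"url": value}})
        else:
            raise ValueError(f"unsupported part kind: {kind!r}")
    return {"role": "user", "content": content}


# Text and image parts keep the order in which they were given.
message = build_multimodal_message([
    ("text", "Answer question 1 on this worksheet."),
    ("image", "https://example.com/worksheet.png"),  # hypothetical URL
    ("text", "Show your working."),
])
print(json.dumps(message, indent=2))
```

Keeping the parts in a single ordered list is what lets an image sit between two pieces of text, which matches the "interspersed" inputs described above.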

Training process

Similar to previous GPT models, the GPT-4 model was trained to predict the next word in a document, using publicly available data (such as internet data). The data is a web-scale collection of information that contains correct and incorrect solutions to mathematical problems, weak and strong reasoning, contradictory and coherent statements, and a wide range of ideologies and ideas. So when prompted with a question, the base model can answer in many different ways, some of which may be far from the user’s intent.

To align the model with the user’s intent within guardrails, OpenAI refines its behavior using reinforcement learning with human feedback (RLHF). RLHF is an additional layer of training that uses human feedback to help GPT-4 learn to follow directions and generate responses that people find satisfactory.
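To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how GPT-4 works internally: GPT-4 is a large transformer network, whereas this toy model simply counts which word follows which in a three-sentence corpus. The corpus and the function names `train_bigram` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy training data (hypothetical, for illustration only).
corpus = [
    "the model predicts the next word",
    "the model reads the prompt",
    "the next word depends on the prompt",
]
counts = train_bigram(corpus)
print(predict_next(counts, "next"))  # "word" follows "next" in both occurrences
```

Even this toy version shows why a base model reflects whatever its training data contains: it can only repeat patterns it has counted, whether or not they are correct.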

How close is GPT-4 to Artificial General Intelligence?

Artificial general intelligence (AGI) is the stage at which a model can think like a human. In particular, it refers to a model that can carry out any intellectual work a human can: learning, reasoning, problem solving, understanding, emotions, and so on. According to OpenAI CEO Sam Altman, GPT-4 is not even close. ChatGPT can generate context-sensitive text, but it does not understand the topics it covers. The information it shares comes from patterns in the text data it was trained on. Its main goal is to produce text and to make that text better. ChatGPT lacks human-like cognitive capacities and is unable to “think” by itself. It also lacks “common sense”, as is clear from some of the chats people have posted online in which the conversation follows no logic. So we will have to wait a while for a true AGI model. In the meantime, we can make use of what ChatGPT is good at: text conversations that can instruct us.