Biology to AI: Evolution of Neural Networks

Once upon a time, during a scorching summer in Kerala, there was a little boy in a little village who lost his little mind looking at the number of pages he had to devour before exam day. Desperate and confused, he fantasized about a world where little boys and girls don’t need to mindlessly cram the stanzas of Malayalam poems or countless mathematical equations. What if there was a genie who could swallow entire poems, equations, books or dictionaries, and answer any question about the knowledge of this world in our own human language??? But all of this was just a fantasy, right? The funny thing is that all of us have had similar fantasies: an entity that could answer any question about arts, science or technology, and create any kind of art.

But here’s the twist: we now have these magical tools in our hands, which can answer questions about nearly all the knowledge humanity has ever gathered, whether it is culture, politics, psychology, games or technology. They can write poems, draw pictures or deliver speeches. They can even drive a car on their own. We call this magical reality Artificial Intelligence. But how is all of this possible for something that doesn’t have a biological body? One thing is certain: it has a ‘brain’ of its own, and this brain is made up of Neural Networks, inspired by the ones in our own brain. Are you curious how humanity achieved the herculean task of creating a non-living brain and making it do things for us? In this blog, we delve into the incredible story of this inorganic brain, aka Artificial Neural Networks: how our ‘brain’ inspired its design, its capabilities and possibilities, and, inevitably, the challenges it poses for humanity!🤗🤗🤗

Genesis of AI and Artificial Neural Networks

Nature: Mother of all Inventions

“It is the marriage of the soul with nature that makes the intellect fruitful, and gives birth to imagination!🏞️🍃 🧘”

Henry David Thoreau

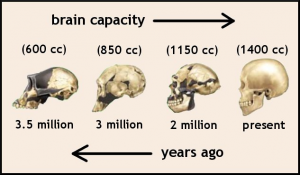

The birth of human innovation can be traced back to our innate connection to nature, which is not surprising, since our brains evolved to learn and adapt in this natural world.🦅✈️

Throughout history, innovations have often been modeled on, or inspired by, natural phenomena; birds, for example, inspired the airplane. The origins of Artificial Intelligence and Artificial Neural Networks are no different: both were originally inspired by biological intelligence and the brain.

Rise, Decline and Resurrection of ANN

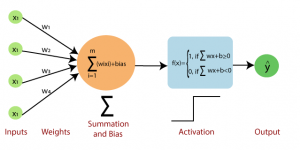

The strength and beauty of ANNs stem from the fact that they mimic the fundamental building block of biological intelligence: the neuron. The genesis of artificial neurons can be traced back to the 1940s, when scientists began to explore the idea of creating a machine that could simulate brain activity.

In the late 1950s, a psychologist named Frank Rosenblatt designed a mathematical model of a neuron called the Perceptron. Inspired by the way brain cells work, it learned by adapting itself to the information it received, and it was capable of classifying simple images. However, research on neural networks went into decline because these early networks could not classify more complex pictures. Literally, an “AI Winter”!!!🥶🥶🥶
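To make the idea concrete, here is a minimal Python sketch of a perceptron, assuming a simple step activation and the classic error-driven learning rule. The names `predict` and `train_perceptron` and the toy AND/XOR data are our own illustrative choices, not Rosenblatt’s original setup. It learns logical AND without trouble, but no setting of its weights can reproduce XOR, a small taste of why single perceptrons hit a wall.

```python
def predict(weights, bias, x):
    """Step activation: fire (1) if the weighted sum exceeds zero, otherwise stay silent (0)."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


X = [[0, 0], [0, 1], [1, 0], [1, 1]]

# Logical AND is linearly separable, so a perceptron can learn it.
w_and, b_and = train_perceptron(X, [0, 0, 0, 1])
print("AND:", [predict(w_and, b_and, x) for x in X])

# Logical XOR is not linearly separable, so no single perceptron can fit it.
w_xor, b_xor = train_perceptron(X, [0, 1, 1, 0])
print("XOR:", [predict(w_xor, b_xor, x) for x in X])
```

A single perceptron can only separate classes with a straight line (a flat boundary in higher dimensions); stacking neurons into layers is what later lifted this restriction.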

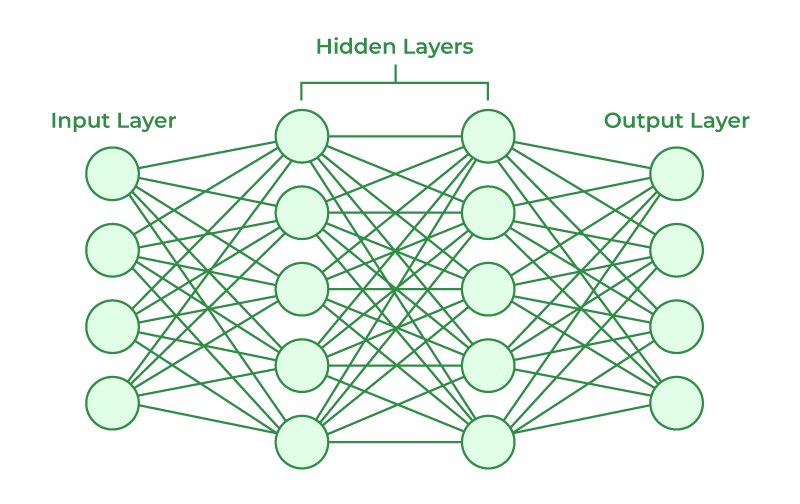

Deep Neural Network with input, hidden and output layers. Hidden layers receive inputs from the input layer and apply transformations, and the output layer turns the result into the network’s predictions.
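The layered structure in the figure can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a tiny 3-input, 4-hidden, 2-output network with a ReLU activation in the hidden layer and hand-picked, hypothetical weights (a real network would learn them from data); `dense` and `forward` are illustrative names, not a library API.

```python
def dense(inputs, weights, biases, activation):
    """Fully connected layer: every neuron takes a weighted sum of all inputs plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def identity(z):
    return z


# Hypothetical weights for a 3-input -> 4-hidden -> 2-output network
W_hidden = [[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.9], [0.05, 0.05, 0.05]]
b_hidden = [0.0, 0.1, -0.1, 0.0]
W_out = [[0.3, -0.2, 0.5, 0.1], [-0.6, 0.4, 0.2, 0.8]]
b_out = [0.0, 0.0]


def forward(x):
    hidden = dense(x, W_hidden, b_hidden, relu)   # hidden layer transforms the input
    return dense(hidden, W_out, b_out, identity)  # output layer turns it into predictions


print(forward([1.0, 2.0, 3.0]))
```

Deep learning libraries do exactly this kind of arithmetic, only with matrices, many more layers and weights learned from data.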

The AI field these days is mostly led by Deep Neural Network architectures, including Convolutional Neural Networks, Recurrent Neural Networks and Transformers. Generative AI models like GPT-4, Midjourney, Whisper, Stable Diffusion and SORA mark a great moment for the technological world. They can write top-notch text, transcribe speech, and create images and even videos that are almost indistinguishable from human work.

SORA: a diffusion Transformer model that generates ultra-realistic videos

Biological and Artificial Neurons: A brief comparison

There are several similarities between biological neurons and artificial neurons, and in both cases the neurons combine into a network that provides the basis for decision making.

Some of the similarities between biological neurons and artificial neurons are:

- Input: In the biological and technological worlds alike, neurons receive inputs from other neurons or sensors, in the form of action potentials in the brain and numbers in a machine. A natural neuron is an electrochemical system, whereas an artificial neuron is a numerical one.

- Activation Function: The activation function helps a neural network learn complex patterns in data. Together with a linear function (a weighted sum of the inputs), it determines a neuron’s output from the inputs it receives. Its rough biological equivalent is the action potential, which governs the flow of information.

- Output: The output of a biological neuron takes the form of chemical signals (neurotransmitters) that travel to the next cells across synapses. In artificial neurons, the output is a number that is passed to the next neuron or becomes the network’s output.

- Learning: Both the brain and Machine Learning models learn from experience. For Machine Learning models and ANNs, this experience is commonly known as ‘data’. The network adjusts itself to this experience using feedback from the errors in its output.

- Network structure: Neurons generally don’t work in solitude; instead, they form a network, aka a Neural Network. Different arrangements form depending on the application or context: Convolutional Neural Networks are built to work with images, and Recurrent Neural Networks handle sequential data. Likewise, the auditory cortex and the visual cortex are two brain regions, the former specialized for sound and the latter for visual information.
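The “linear function plus activation function” recipe from the list above can be illustrated with a small sketch. Assuming two stacked single-input neurons with made-up weights (the helper names are hypothetical), it shows why the activation matters: without a non-linear activation, the stack collapses into a single straight line, 3(2x - 1) + 0.5 = 6x - 2.5, whereas a ReLU activation introduces a bend that lets networks capture more complex patterns.

```python
import math


def neuron(inputs, weights, bias, activation):
    """Artificial neuron: a linear weighted sum of the inputs, then an activation function."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def linear(z):
    return z


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def two_neurons(x, activation):
    """Two stacked single-input neurons with hypothetical weights."""
    hidden = neuron([x], [2.0], -1.0, activation)
    return neuron([hidden], [3.0], 0.5, linear)


for x in [-1.0, 0.0, 1.0, 2.0]:
    print(f"x={x:+.1f}  linear: {two_neurons(x, linear):+.2f}  "
          f"relu: {two_neurons(x, relu):+.2f}  sigmoid: {two_neurons(x, sigmoid):+.2f}")
```

With `linear`, the outputs fall exactly on the line 6x - 2.5; with `relu`, negative hidden values are clipped to zero, so the output stays flat for small inputs and then rises.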

Why Neurons are not Neurons!!!

Although they draw inspiration from real neurons, artificial neurons should not be equated with them. It is intuitive that many man-made machines don’t share the internal working principles of the biological systems found in nature. A bird’s and an airplane’s core parts aren’t the same at all, yet both make use of the principles of aerodynamics.

An ANN is arranged as layers of neurons, whereas the brain comprises billions of densely interconnected neurons.

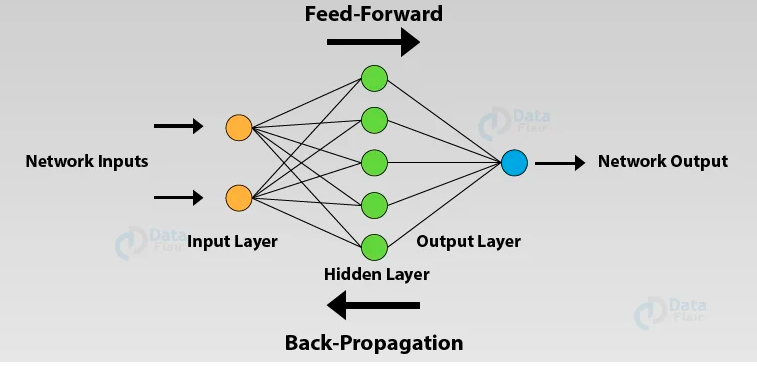

The brain learns through a phenomenon called ‘synaptic plasticity’, which lets neurons strengthen, weaken, make or break their connections. In an ANN, by contrast, the strength of the connections between neurons is determined by weights. ANNs adjust these weights according to experience, using an algorithm known as ‘backpropagation’[4] to work out how each weight should change. As the name suggests, backpropagation propagates something backward through the network, and what it propagates is nothing other than ‘errors’. ANNs are masters of making ‘errors’, and they master anything by making errors and adjusting accordingly.
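Here is a minimal sketch of backpropagation in plain Python, assuming a toy network with two inputs, two sigmoid hidden neurons, one sigmoid output and a squared-error loss; all function names and starting weights are hypothetical. The output error is pushed backward through the layers with the chain rule, and each weight is then nudged against its gradient (plain gradient descent).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: inputs -> sigmoid hidden layer -> sigmoid output neuron."""
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(params["W1"], params["b1"])
    ]
    output = sigmoid(sum(w * h for w, h in zip(params["W2"], hidden)) + params["b2"])
    return hidden, output


def loss(params, x, target):
    """Squared error between the network's output and the target."""
    _, output = forward(params, x)
    return 0.5 * (output - target) ** 2


def backward(params, x, target):
    """Backpropagation: send the output error backward through the layers (chain rule)."""
    hidden, output = forward(params, x)
    # Error signal at the output neuron: dLoss/dz = (y - t) * sigmoid'(z)
    delta_out = (output - target) * output * (1.0 - output)
    # Propagate that error back to each hidden neuron through its outgoing weight
    delta_hidden = [
        delta_out * w * h * (1.0 - h) for w, h in zip(params["W2"], hidden)
    ]
    return {
        "W1": [[d * xi for xi in x] for d in delta_hidden],
        "b1": delta_hidden,
        "W2": [delta_out * h for h in hidden],
        "b2": delta_out,
    }


def gradient_descent_step(params, grads, lr=0.5):
    """Move every weight a small step against its gradient to reduce the error."""
    return {
        "W1": [[w - lr * g for w, g in zip(row, g_row)]
               for row, g_row in zip(params["W1"], grads["W1"])],
        "b1": [b - lr * g for b, g in zip(params["b1"], grads["b1"])],
        "W2": [w - lr * g for w, g in zip(params["W2"], grads["W2"])],
        "b2": params["b2"] - lr * grads["b2"],
    }


# Hypothetical starting weights and a single training example
params = {"W1": [[0.5, -0.4], [0.3, 0.8]], "b1": [0.1, -0.2], "W2": [0.7, -0.6], "b2": 0.05}
x, target = [1.0, 0.0], 1.0

before = loss(params, x, target)
for _ in range(50):
    params = gradient_descent_step(params, backward(params, x, target))
after = loss(params, x, target)
print(f"loss before: {before:.4f}, after: {after:.4f}")
```

A quick sanity check for hand-written backpropagation is to compare its gradients with a finite-difference estimate; the two should agree closely. Modern frameworks compute these gradients automatically, but the principle of sending errors backward is the same.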

Why are we better???

“The human brain is by far the most complex physical object known to us in the entire cosmos!😎”

Owen Gingerich

Even though AI systems have come a long way in the realm of intelligence, they are still babies compared to their natural counterpart.

Here are some advantages of Biological Neural Networks (BNNs) over ANNs:

- Complexity: A BNN is a network of billions of interlinked neurons that communicate with one another through electrical and chemical signals, while ANNs consist of comparatively simple layers of artificial neurons that communicate through simple mathematical functions.

- Adaptability: Let’s assume that you have an identical twin who was brought up in an entirely different country. Today, both of you look almost the same, but your habits, the language you speak, the ideologies you hold, the challenges you face and the skills you possess will all be different. The credit for these differences goes to your ‘neural connections’. Even though both of you have the same brain structure, the connections between its neurons are now tuned differently according to the requirements of your lives. This remarkable adaptability stems from the flexibility in making connections between different neurons. A large neural network like GPT-4, by contrast, has a comparatively rigid architecture. Although it can tune its weights to make predefined connections stronger or weaker, it doesn’t have the brain’s freedom to form entirely new connections.

- Generalization: Biological neural networks can generalize their knowledge from one context to another across diverse areas of life, whereas ANNs still struggle to generalize beyond what they were trained on.

- Energy Efficiency: The brain is one of the most metabolically demanding organs in our body, yet it uses very little energy compared to AI systems.

The brain is so sophisticated that it remains far from replicable, no matter how advanced ANNs become. It is an organ capable of completing complicated mental tasks, resolving emotional, physical and environmental challenges, and creating masterpieces all over the world, whether in arts, games, culture, philosophy, science or technology.

“If the human brain were so simple that we could understand it, we would be so simple that we couldn’t!!!🤭”

Emerson M. Pugh

The main reason for this performance gap between the brain and Artificial Neural Networks (ANNs) is that ANN architecture is based on the limited knowledge of the brain available in the mid-20th century. The brain, and consciousness in particular, is more complicated than one might expect from a group of interacting cells.

While neuroscientists today cannot yet explain remarkable phenomena like “consciousness”, a few studies suggest that non-classical physical phenomena such as “quantum entanglement” in the brain might be at play behind the scenes. As neuroscience advances, we may discover special traits of the brain that can be used to improve AI technology.

ANN vs BNN: Is AI the Future of Intelligence?

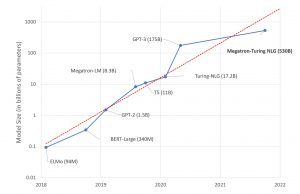

So far, we have seen that Biological Neural Networks (BNNs) outperform ANNs in many respects. However, it is not certain that this will always be the case, since ANNs have some inherent advantages over BNNs. AI therefore has the potential to turn the world upside down if it can overcome the drawbacks mentioned above.

Below are a few benefits that ANNs offer in comparison to BNNs:

- Speed and Efficiency: Because they rely on chemical signaling, biological neurons are very slow compared to their artificial counterparts.

- Scalability: Brain size is limited by the skull. The size of ANNs, on the other hand, can range from a single chip to thousands of storage and processing units distributed around the globe.

- Accuracy: The human brain did not evolve for mathematical precision; it is prone to prejudiced feelings and inaccurate perceptions of objective reality. Unlike the subjective, emotional brain, ANNs are not swayed by emotions, so they can record and process information more precisely, at least when working with large amounts of data.

- Reproducibility: Thanks to their mathematical and computational nature, ANNs are highly replicable (a trained network can be copied exactly), unlike their biologically constrained counterpart.

- Energy supply: The brain is limited in how many neurons can fire simultaneously, because the human body cannot supply unlimited power. ANNs, on the contrary, can consume far more energy because they draw on external electrical power sources. For instance, it has been claimed that training GPT-3 consumed as much electricity as a medium-sized city uses in a week.

- Updatability: The level of human intelligence we have today is the byproduct of millions of years of evolution, and reaching the next level biologically could take just as long. Artificial Neural Networks, however, have made all of this great progress in just a few decades by building on advances in science and technology.

Recent developments have shown that AI systems can go far beyond what we expected, given better computational resources, neural architectures, datasets and optimization.

Let’s listen to what the “Godfather of AI” Geoffrey Hinton, who did groundbreaking work on neural networks, has to say about the present and future of these systems… [8]

Geoffrey Hinton: We have a very good idea of sort of roughly what it’s [Neural Network] doing. But as soon as it gets really complicated, we don’t actually know what’s going on any more than we know what’s going on in your brain.😕

Interviewer: What do you mean by we don’t know exactly how it works?🤔 It was designed by people!😲

Geoffrey Hinton: No, it wasn’t.😉 What we did was we designed the learning algorithm😎 That’s a bit like designing the principle of evolution. But when this learning algorithm then interacts with data, it produces complicated neural networks that are good at doing things. But we don’t really understand exactly how they do those things!!!🥴🥴🥴

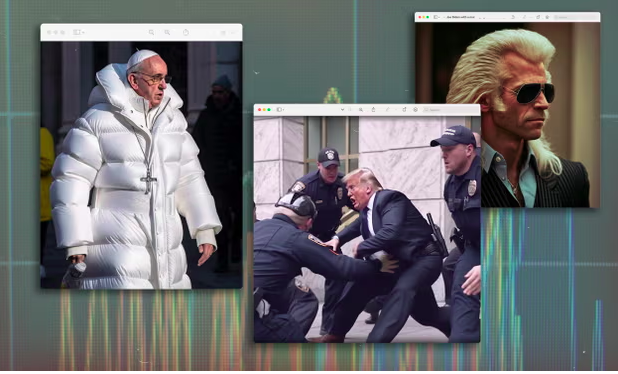

From this conversation, it’s evident that even the so-called “Godfather of AI” doesn’t fully understand how advanced AI systems do what they do, since these systems are rapidly growing beyond our understanding. He goes on to discuss how AI systems could spread convincing misinformation, having learned to do so efficiently. He is also seriously worried that these systems could escape human control by writing their own code and executing it to modify themselves.

As research continues, ANNs will get smarter day by day and contribute greatly to solving real-world problems. On the other side of the story, as AI grows faster and faster, people are also becoming aware of its potential downsides for society.

Year by year, AI-generated content is becoming more flawless and indistinguishable from reality🤕

The AI Apocalypse: Doomsday for humanity???

“The development of full artificial intelligence could spell the end of the human race!😱😱😱”

Stephen Hawking

The growing sophistication of AI has raised concerns that these systems may run out of control or become unpredictable, leading to harmful consequences. There are worries that automation may cause job losses, that AI may be misused, or, in the worst case, that robots may turn from our servants into our masters. For this reason, various experts propose strict regulation and supervision of AI development to prevent potential abuse. In the end, we need to carefully understand and mitigate the risks arising from AI to ensure a positive outcome as AI rapidly reshapes society…

AI systems don’t always follow the intentions of their human inventors!

Conclusion

In the last few years, AI and Artificial Neural Networks have made significant strides in a wide range of areas, whether text, images, videos, speech or autonomous driving. Still, AI systems remain far from our brain in terms of structure and efficiency. But the superfast advancement in ANN architectures could change the fate of AI and humanity since, unlike us, ANNs are not constrained by evolutionary barriers. So it is the duty of humanity as a whole to ensure that AI develops along ethical and moral lines. We hope that AI can help humans build a better world for all of us!!!😌😌😌

Bibliography

- Bur, Tatiana, C. D. (2021). Mechanical Miracles: Automata in Ancient Greek Religion. University of Sydney. https://ses.library.usyd.edu.au/handle/2123/345

- Rosenblatt, F. (1958). The perceptron: A probabilistic model for information storage and organization in the brain. Psychological Review, 65(6), 386–408. https://doi.org/10.1037/h0042519

- McCulloch, W. S., & Pitts, W. (1943). A logical calculus of the ideas immanent in nervous activity. The Bulletin of Mathematical Biophysics, 5(4), 115–133. https://doi.org/10.1007/bf02478259

- Rumelhart, D. E., Hinton, G. E., & Williams, R. J. (1986). Learning representations by back-propagating errors. Nature, 323(6088), 533–536. https://doi.org/10.1038/323533a0

- Brown, T. B., et al. (2020). Language Models are Few-Shot Learners. arXiv. https://arxiv.org/pdf/2005.14165.pdf

- Hofman, M. A. (2014). Evolution of the human brain: when bigger is better. Frontiers in Neuroanatomy, 8. https://doi.org/10.3389/fnana.2014.00015

- Kerskens, C. M., & López Pérez, D. (2022). Experimental indications of non-classical brain functions. Journal of Physics Communications, 6(10), 105001. https://doi.org/10.1088/2399-6528/ac94be

- 60 Minutes. (2023, October 9). Godfather of AI Geoffrey Hinton: The 60 Minutes Interview [Video]. YouTube